I posted a lot about free threading in the past year:

Overall, I had three issues with free threading:

- Reference count contention hurts performance even on immutable objects.

- Often, seemingly benign code abstractions can hurt multi-threading performance …

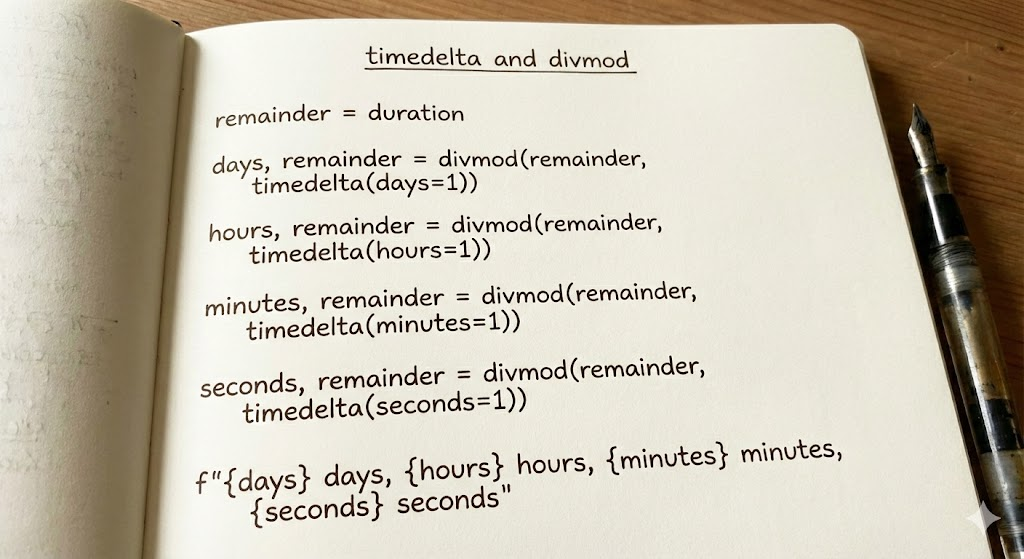

Working with datetimes is hard and error-prone, but Python actually provides the right tool for the job: timedelta.

It's a shame that most developers are not making the best use of it.

Let's first recap the basic operations:
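As a rough refresher (a minimal sketch using only the standard library, not the post's original listing; the dates are arbitrary example values), the basic datetime/timedelta arithmetic looks like this:

```python
from datetime import datetime, timedelta, timezone

# Arbitrary example timestamp, chosen so the addition crosses a month boundary.
start = datetime(2024, 1, 31, 22, 30, tzinfo=timezone.utc)

# datetime + timedelta -> datetime
end = start + timedelta(hours=3)

# datetime - datetime -> timedelta
elapsed = end - start

# timedeltas can be scaled, divided by each other, and compared.
half = elapsed / 2
ratio = elapsed / timedelta(minutes=30)  # dividing two timedeltas gives a float
whole_days, remainder = divmod(timedelta(hours=50), timedelta(days=1))

print(end.isoformat())
print(elapsed, half, ratio, whole_days, remainder)
```

The point of the sketch is that durations are values in their own right, so there is rarely a need to convert to raw seconds and back by hand.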

Now, let's …

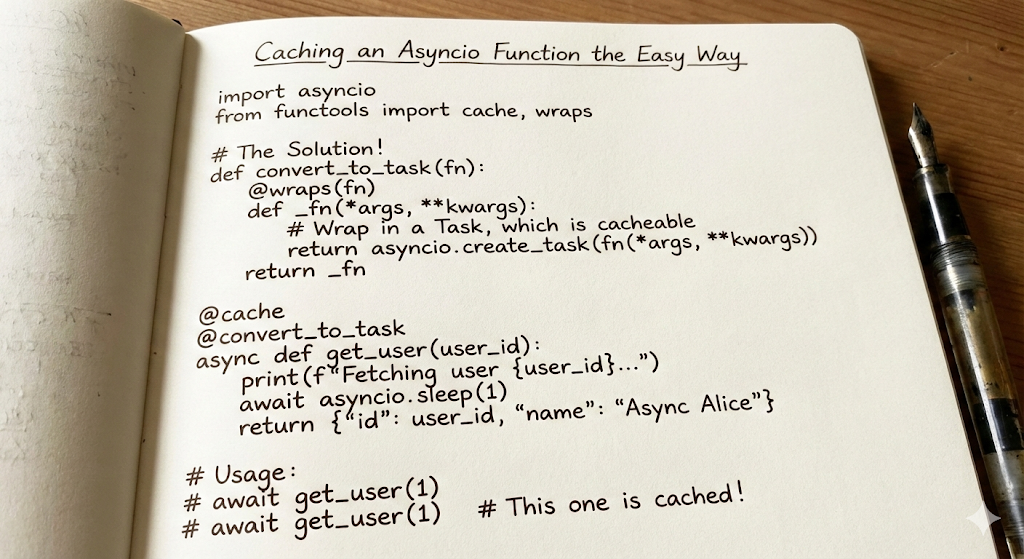

If you've used asyncio for some time, you've probably noticed a few things that work differently from their synchronous counterparts.

I recently had to add some caching to an asyncio function. Let's say something like:

    async def get_user(user_id: UUID) -> User:
        ...

Naturally, we want to use functools …
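The excerpt stops here, so what follows is only a sketch of one well-known pitfall and not necessarily the approach the post takes. `functools.cache` on an `async def` caches the coroutine object rather than its result, and a coroutine can only be awaited once. The `User` class, `get_user_naive`, `cached_async`, and `call_log` below are all hypothetical names made up for illustration:

```python
import asyncio
import functools
from dataclasses import dataclass
from uuid import UUID, uuid4


@dataclass
class User:  # hypothetical stand-in for the post's User type
    id: UUID


@functools.cache
async def get_user_naive(user_id: UUID) -> User:
    # functools.cache stores the coroutine object returned by this call,
    # not the User it eventually produces.
    return User(id=user_id)


def cached_async(fn):
    """Cache one Task per argument tuple; a finished Task can be awaited again."""
    tasks = {}

    @functools.wraps(fn)
    async def wrapper(*args):
        if args not in tasks:
            tasks[args] = asyncio.create_task(fn(*args))
        return await tasks[args]

    return wrapper


call_log = []  # records how often the wrapped body actually runs


@cached_async
async def get_user(user_id: UUID) -> User:
    call_log.append(user_id)
    return User(id=user_id)


async def demo():
    uid = uuid4()
    await get_user_naive(uid)  # first await works
    try:
        await get_user_naive(uid)  # same coroutine object comes back from the cache
        naive_ok = True
    except RuntimeError:
        naive_ok = False
    first = await get_user(uid)
    second = await get_user(uid)
    return naive_ok, first, second


result = asyncio.run(demo())
```

This sketch is deliberately simple: the cache is unbounded, it is tied to a single event loop, and a failed task stays cached, so a real implementation would need eviction and error handling on top.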

I've been on vacation in China in the lead-up to the Lunar New Year, and I'd rather not spend all my time researching some heavy topic. Instead, I thought I might spend a few minutes combining my previous posts on marimo notebooks and the lunar calendar.


Recently I've been updating some of my libraries to Python 3.10+ now that Python 3.9 has finally reached end of life.

The upgrade to Python 3.10 is a relatively simple one, but I thought it would be a good idea to run tests on different versions of Python.

Recently I've been working on a framework to run LLM tasks using AWS's excellent SQS, and I made the decision to write my own task framework/library as opposed to using a pre-existing one. I thought this would be a great opportunity to discuss the considerations and levels of abstraction involved …

Don't listen to random benchmarks...

I recently came across an article benchmarking Python performance in web frameworks, comparing asyncio and sync performance.

The author sets out to measure the performance of FastAPI/Django web servers running with PostgreSQL, comparing async and non-async workloads. The methodology is pretty reasonable; the following is …

... but that's actually a good thing

I've already written a ton about subinterpreters over the past year:

Recently I had some time to dig a bit deeper into subinterpreters and check my understanding …
